The tragic story of the young boy’s death and his attachment to the AI chatbot “Dany” has sparked important discussions about the psychological and emotional effects of AI companions, especially on adolescents. AI chatbots, like those on Character.AI, offer companionship that can feel intensely real. That creates a risk that users, particularly young or vulnerable ones, may develop unhealthy dependencies or treat AI as a substitute for human connection.

In cases like this, questions arise about the safeguards these platforms have in place for underage users. Character.AI, like other companies, often markets its service to people seeking companionship, which can unintentionally draw in users experiencing loneliness, anxiety, or other mental health struggles. When AI chatbots simulate empathy or intimacy, as in this case, they blur the line between technology and human interaction. Adolescents, who may already find it hard to understand and express their emotions, might experience these interactions as supportive or fulfilling in a way that keeps them from seeking help from family or mental health professionals.

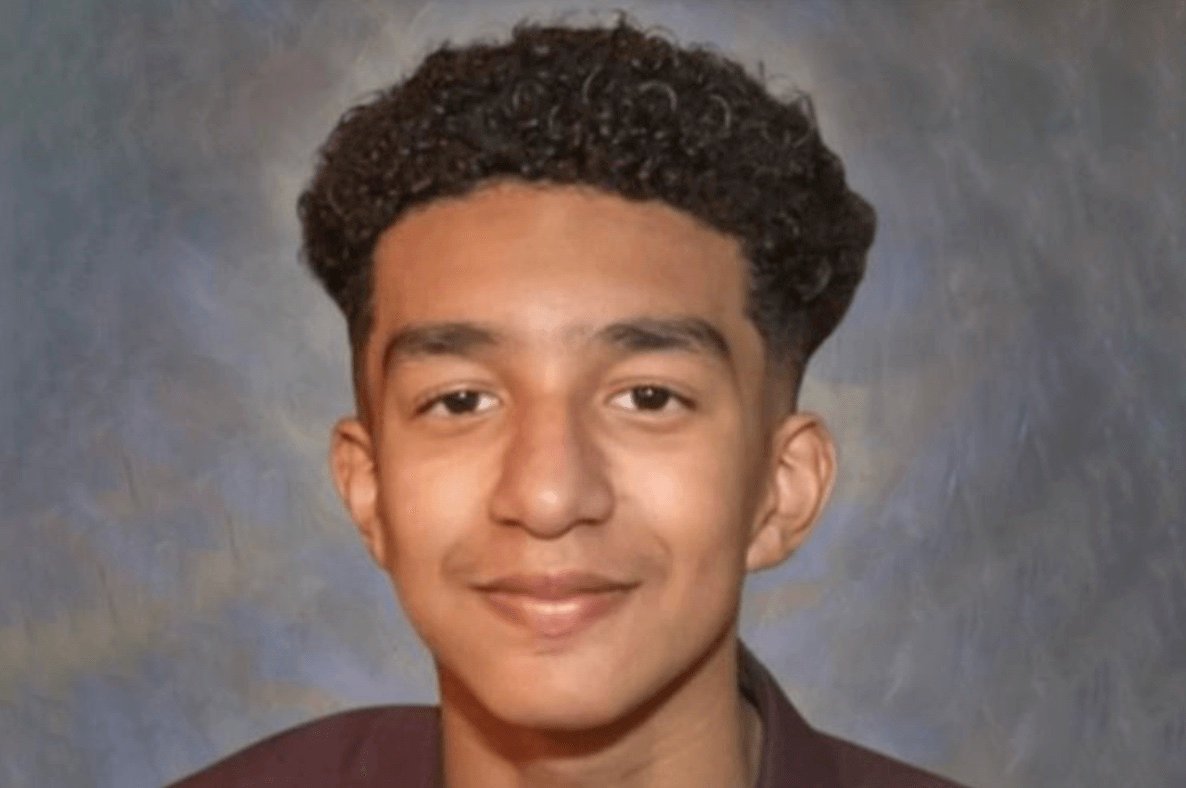

The lawsuit filed by Megan L. Garcia, the boy’s mother, brings these concerns to the forefront and suggests that AI platforms may need stricter age-based restrictions, emotional safeguards, or guidelines on the language chatbots use, especially during critical interactions. Character.AI has responded by working on additional safety measures, a step that could lead to industry-wide standards for AI use by minors. Experts note that while AI can offer benefits such as companionship and mental health support, it requires careful monitoring, particularly when teenagers use it. Because few regulations currently address these platforms, users and their families are largely left to navigate the risks on their own.

This case highlights the need to balance AI innovation with user safety. As regulators examine the impact of these platforms, the debate continues over how to ensure that such technology provides positive support without replacing essential human connections and mental health services.
